PhD

shuchang [dot] zhou [at] gmail.com

I graduated from Tsinghua University in 2004 and obtained my PhD from the Chinese Academy of Sciences.


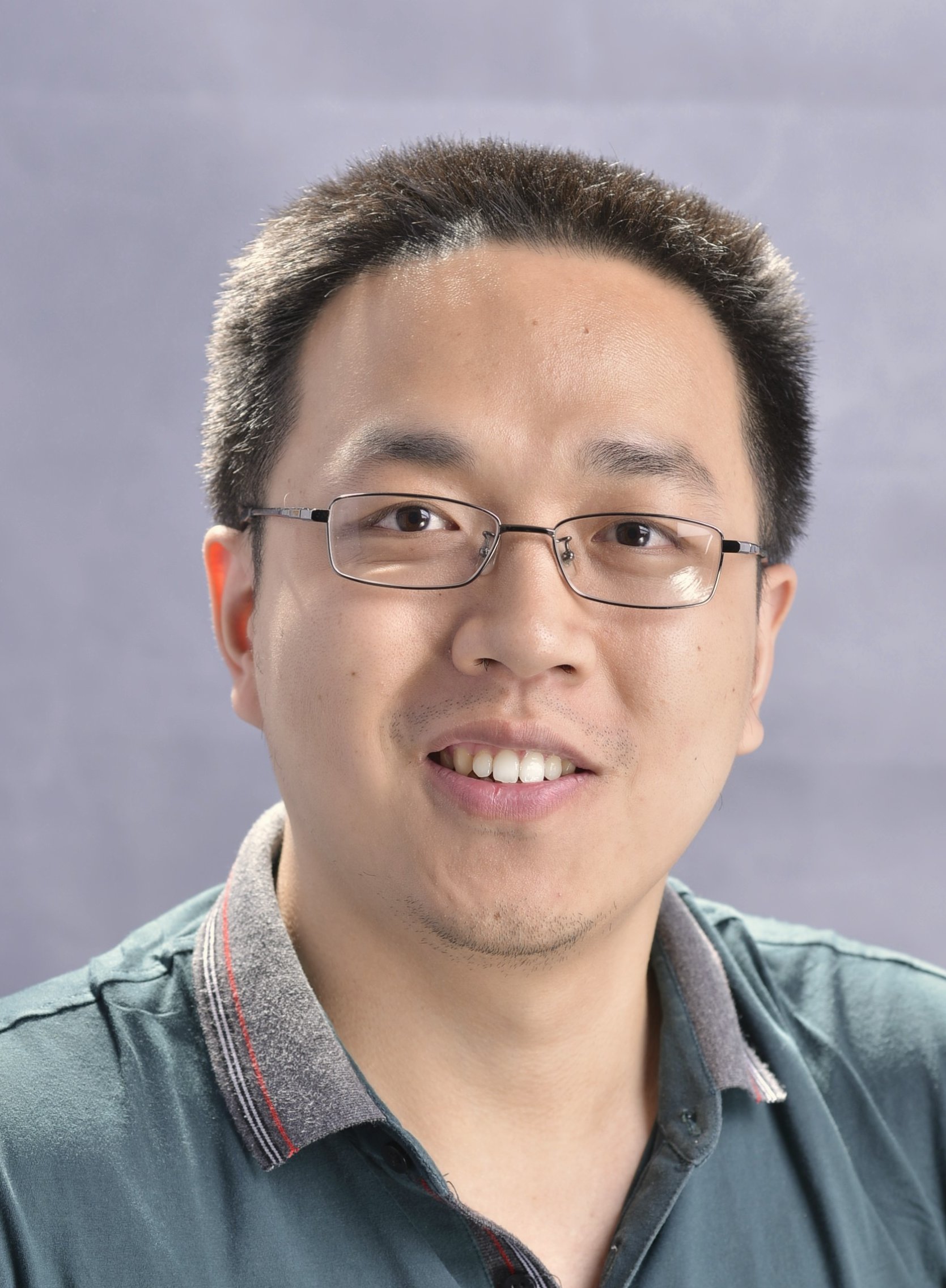

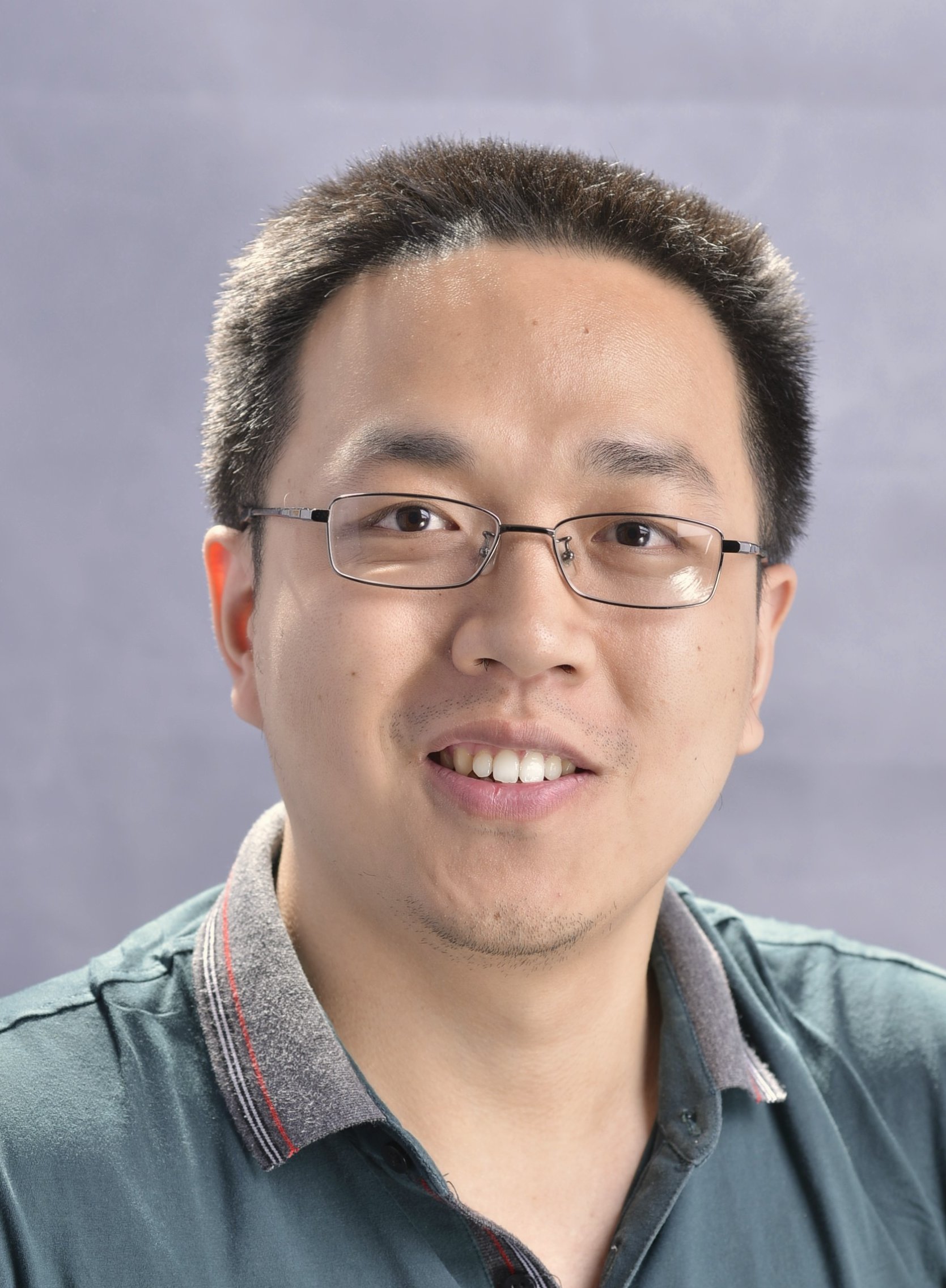

ZHOU Shuchang (周舒畅)
